Large language models such as the one behind ChatGPT can churn out thousands of words in just a minute, and they can take in lengthy inputs just as swiftly. It might sound like magic, but unlike us humans, the chatbot doesn’t break text down into individual sentences or words.

Instead, ChatGPT relies on tokens to understand and generate human languages such as English, Spanish, and more. So, in this article, let’s cover the basics – how ChatGPT tokens function, why they’re needed, and how they impact your chatting experience.

What are ChatGPT tokens?

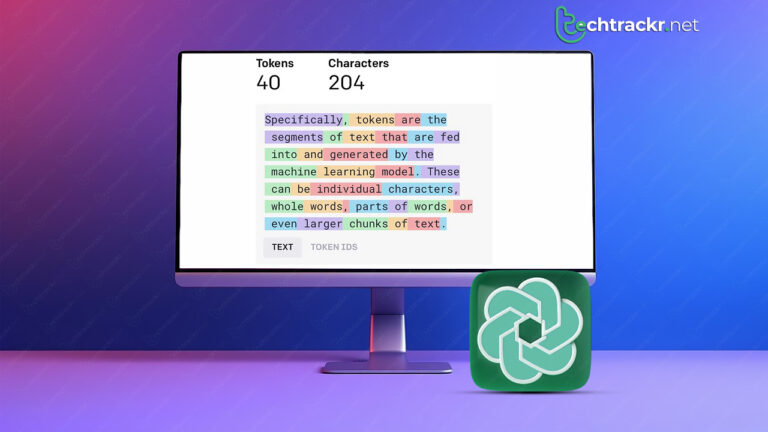

Tokens are like the LEGO bricks of any ChatGPT text response. We usually think in terms of words when organizing text, but the GPT language model doesn’t operate that way. Instead, it hunts for familiar combos of letters and clumps them into tokens.

ChatGPT tokens might sound a bit fuzzy, so let’s break it down with an example. Take the word “air” – it’s a pretty common word in everyday talk. The model has probably bumped into it quite a bit during its training sessions. Because of this, “air” gets smooshed into a single token.

But if you check out a longer and not-so-everyday word like “airline,” you’ll notice something interesting: the language model sees “air” and “line” as separate tokens.
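To make the “air” versus “airline” idea concrete, here is a minimal sketch of splitting a word into known pieces. It is only an illustration: the vocabulary `TOY_VOCAB` and the function `toy_tokenize` are made up for this example, and the greedy longest-match rule is a simplification, not the actual algorithm or vocabulary that ChatGPT uses.

```python
# Hypothetical vocabulary, chosen only to mirror the example in the text.
TOY_VOCAB = {"air", "line", "a", "i", "r", "l", "n", "e"}


def toy_tokenize(word, vocab=TOY_VOCAB):
    """Split a word into the longest known pieces, scanning left to right."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No known piece starts here: keep the single character as a token.
            tokens.append(word[start])
            start += 1
    return tokens


print(toy_tokenize("air"))      # ['air']
print(toy_tokenize("airline"))  # ['air', 'line']
```

With this toy vocabulary, “air” stays whole because it is a known piece, while “airline” is not in the vocabulary and falls apart into two pieces that are.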

Tokens might not be on the radar for most chatbot users, but they do affect ChatGPT’s character limit. And it’s not just about that: the language model also has a cap on how many tokens it can keep in mind at once, which is known as its “context window.” So, if you drop a nugget of info at the start of your convo, it might slip the model’s mind once enough tokens have piled up after it.
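Here is a rough sketch of why early messages can drop out of a conversation. It assumes a simple “keep the newest messages that fit” strategy; how ChatGPT actually manages its context window is not described here, and both `trim_to_context_window` and the word-based `rough_count` are hypothetical helpers for illustration only.

```python
def trim_to_context_window(messages, max_tokens, count_tokens):
    """Keep the most recent messages whose combined token count fits the cap."""
    kept = []
    used = 0
    # Walk backwards so the newest messages are considered first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


def rough_count(text):
    # Stand-in counter (an assumption): one token per whitespace-separated word.
    return len(text.split())


history = [
    "My name is Sam.",
    "What is a token?",
    "A token is a chunk of text.",
]
print(trim_to_context_window(history, max_tokens=11, count_tokens=rough_count))
# ['What is a token?', 'A token is a chunk of text.']
```

With a budget of 11 “tokens,” the two newest messages fit and the oldest one, the one with the name in it, is the first to go.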

How to keep track of tokens in ChatGPT?

Everyday words you find in the English dictionary usually get squeezed into a single token. But when it comes to fancier, more complicated words, they might not fit into one neat little package and could end up being a bunch of tokens all at once.

The table up there gives you a general idea, but it’s not foolproof for figuring out how many tokens a word or phrase will end up as. Let’s take numbers, for instance. Simple ones like “123” or “333” count as just one token. But when you throw in longer strings of numbers, they’ll break into multiple tokens.

According to OpenAI, the folks who made ChatGPT, around 100 tokens roughly match up to 75 words. But remember, that ratio between word count and token count only really applies to English text.
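As a quick back-of-the-envelope helper, the 100-tokens-per-75-words rule of thumb can be turned into a small estimator. This is only a sketch for English text: the function name `estimate_tokens` is made up for this example, and splitting on whitespace is an assumption about what counts as a word.

```python
def estimate_tokens(text):
    """Estimate the token count of English text from its word count."""
    words = len(text.split())
    # 100 tokens per 75 words, rounded up using integer arithmetic.
    return (words * 100 + 74) // 75


sample = "Tokens are the building blocks of every ChatGPT response."
print(estimate_tokens(sample))  # 9 words -> 12
```

Treat the result as a ballpark figure. The real count depends on the exact words, punctuation, and language.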

Text in other languages, especially the less mainstream ones, tends to hog more tokens. Take Vietnamese, for instance. The 17 characters in the text “Bãi đậu xe ở đâu?” gobble up a whopping 13 tokens.

If you wanna see how many tokens a chunk of text eats up, you can use OpenAI’s Tokenizer tool for free. It picks out the different tokens in your text and shows you how the text gets split.
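One plausible contributor to the Vietnamese example is sketched below, on the assumption that the tokenizer starts from UTF-8 bytes, as byte-level tokenizers do: accented letters take more than one byte each, so there is more raw material to split up. The helper `char_and_byte_counts` is hypothetical, and byte counts are not token counts, so this only hints at the effect.

```python
import unicodedata


def char_and_byte_counts(text):
    """Return (number of characters, number of UTF-8 bytes) for the text."""
    # Normalize to NFC so each accented letter is one precomposed character.
    normalized = unicodedata.normalize("NFC", text)
    return len(normalized), len(normalized.encode("utf-8"))


for sample_text in ["Where is the car park?", "Bãi đậu xe ở đâu?"]:
    chars, utf8_bytes = char_and_byte_counts(sample_text)
    print(f"{sample_text!r}: {chars} characters, {utf8_bytes} UTF-8 bytes")
```

The English sentence needs exactly one byte per character, while the Vietnamese one needs noticeably more bytes than it has characters.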

What is ChatGPT’s token limit?

The token cap in ChatGPT varies depending on which model you’re rocking and how you’re using it, whether you’re chatting casually or connecting to the model through code. Now, if you’re the programming type and you’re integrating GPT into your own app, you’re looking at a token cap ranging from 4,096 to 128,000 tokens, depending on the model. With the newer models like GPT-4 Turbo, you get beefier limits, but expect to fork out more cash per query.

If you’re not into coding, you’ll just be chatting with the bot through the website or app. A lot of folks have said that ChatGPT’s token limit sticks at 4,096. But here’s the kicker – OpenAI hasn’t officially spilled the beans on the exact limit, so it could shift without warning.

Interestingly, even if you shell out for ChatGPT Plus, which hooks you up with the latest and greatest GPT-4 model, the token limit stays put.

What do ChatGPT tokens cost?

Just like the token cap, the price per ChatGPT token varies based on which model you go for. No surprise here – the newest models come with a heftier price tag compared to the older GPT-3.5, which hit the shelves back in late 2022. Also, OpenAI tends to drop small updates to the language models that cut down on computational expenses, which in turn brings down the cost of each token whipped up.

Now that we’ve got that disclaimer covered, let’s break down how much you’ll be shelling out for ChatGPT tokens, model by model:

GPT-3.5 Turbo: Being the OG model still rocking the free version of ChatGPT, it’s on the more affordable end. Developers cough up $0.0010 for every 1,000 input tokens and $0.0020 for every 1,000 output tokens.

GPT-4: Rolled out in early 2023 not long after ChatGPT, the GPT-4 language model delivers top-notch responses. It comes in two flavors: one with an 8,192 token cap and another with 32,768. The former goes for $0.03 for every 1,000 input tokens and $0.06 for every 1,000 output tokens.

GPT-4 Turbo: Despite being the newest kid on the block, GPT-4 Turbo is all about maximizing computational efficiency compared to GPT-4. That’s why it’s priced at $0.01 for every 1,000 input tokens and $0.03 for every 1,000 output tokens. It’s a fair jump from GPT-3.5 Turbo, but its beefed-up logical skills might just justify the cost for certain users.
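To see what those per-1,000-token prices mean for a single request, here is a small cost calculator. It is a sketch using only the figures quoted above, which may change over time. The dictionary keys are labels invented for this example rather than official model identifiers, the GPT-4 entry is the smaller of its two variants, and `estimate_cost` is a hypothetical helper.

```python
# USD per 1,000 tokens, copied from the prices quoted in this article.
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"input": 0.0010, "output": 0.0020},
    "gpt-4": {"input": 0.03, "output": 0.06},
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
}


def estimate_cost(model, input_tokens, output_tokens):
    """Return the estimated cost in USD for one request."""
    price = PRICES_PER_1K[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1000


# Example: a 1,500-token prompt that gets a 500-token reply.
for model in PRICES_PER_1K:
    print(f"{model}: ${estimate_cost(model, 1500, 500):.4f}")
```

For that example request, the estimate works out to $0.0025 on GPT-3.5 Turbo, $0.0750 on GPT-4, and $0.0300 on GPT-4 Turbo.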

How to buy ChatGPT tokens?

If you’re just using ChatGPT casually, tokens are not something you need to worry about. But if you’re a developer or someone keen on tinkering around, you can dive into the nitty-gritty and interact directly with the core language model.

The OpenAI Playground gives you a setup akin to ChatGPT, but it’s not a free ride. Every message you send or get is billed at the per-token prices covered earlier. It might rack up some bills, but it lets you work with the full-blown language model up to each model’s own token limit, rather than the tighter cap in the chat app.